Join Us

We believe that exploring the intricacies of computer-based perception benefits significantly when curious and enthusiastic individuals from various backgrounds collaborate within a welcoming and inclusive environment. VISURG reinforces this environment by actively seeking imaginative and inquisitive individuals who possess an interest in Machine Learning and Computer Vision, drawing from a wide array of scientific fields. Our members represent diverse backgrounds, including Computer Science, Medical Imaging, Robotics, Signal Processing, and more. If the prospect of becoming part of a supportive and diverse team that enjoys developing cutting-edge methods excites you, get in touch!

Applying for a PhD

You can explore our current PhD openings below. Closed positions are also listed to give you a sense of the roles we typically offer. In addition to the advertised positions, we also accept talented PhD candidates who have secured personal scholarships (e.g. K-CSC).

If you are considering applying for a PhD in our group, the application process is outlined below:

- Kindly reach out to us via email to express your interest in pursuing a PhD with us. Additionally, provide information about your desired research topic, specifying whether it aligns with one of the projects announced on this website or if it is a different one.

- Submit an online application via King’s Apply. For Programme Name, enter Computer Science Research MPhil/PhD. In the Research Proposal area, mention the project title (see projects below) and specify Dr. Garcia Peraza Herrera as your intended supervisor. The information on what you need to provide for this application can be found here.

- Each PhD Studentship below may have specific additional application steps; please follow them accordingly.

Shortlisted applicants will receive a machine learning challenge by email. They will then be invited to present their results in an interview, which is used to assess their research potential and the contributions they could make to the group's research activities.

Open fully-funded PhD studentships

- PhD project: Self-Supervised Foundation Models for Video Panoptic Understanding

Application

- Deadline: 1st January 2026, 23:59 UK time.

- Application steps: please follow the guidelines here.

Details

- Primary supervisor: Dr. Garcia Peraza Herrera

- Start date: October 2026

- Eligibility: Open only to students eligible for K-CSC.

Project overview

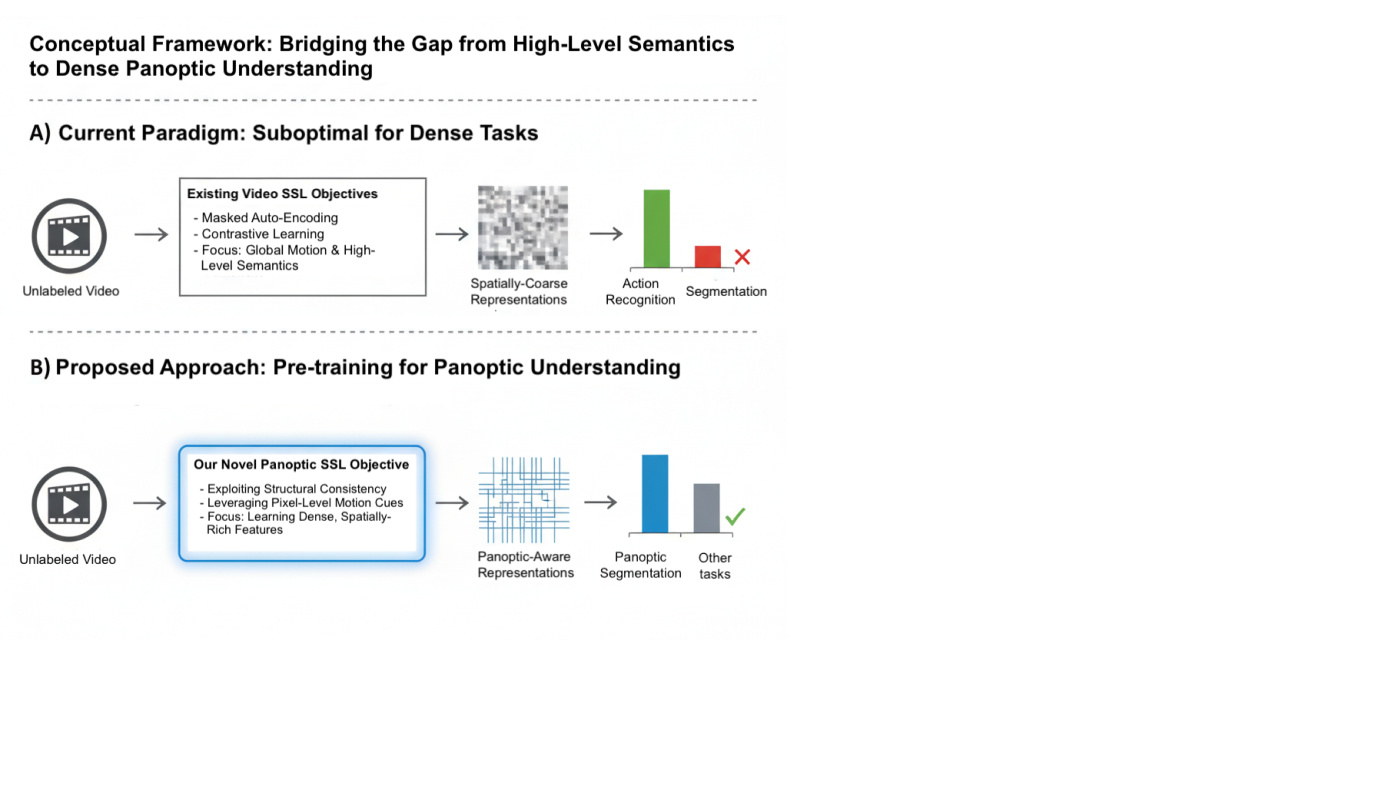

The development of foundation models has transformed AI, and video is the next frontier. Video Foundation Models (ViFMs) have shown great promise in high-level tasks like action recognition and text-based retrieval. However, there remains an unmet need for powerful, general-purpose representations that can support dense, pixel-level understanding tasks such as panoptic segmentation.

While self-supervised learning (SSL) has become the dominant paradigm for pre-training visual models without relying on labeled data, current SSL objectives are suboptimal for tasks requiring fine-grained, pixel-level understanding. Existing pretext tasks [1] focus on capturing motion and high-level semantics, making them unsuitable for panoptic segmentation.

Despite the importance of panoptic segmentation, there is no widely adopted self-supervised pre-training strategy specifically designed to learn representations tailored to this task in the video domain. This project aims to bridge this gap by developing a novel SSL objective that leverages the inherent structure and motion in unlabeled videos as a supervisory signal for learning panoptic representations.

Figure 1. Conceptual framework contrasting our proposed pre-training strategy with the current paradigm.

The initial goal of this project will be to design a new video foundation model using a novel self-supervised pre-training strategy, tailored to the specific requirements of panoptic segmentation. Our approach will focus on exploiting the structural information present in videos to learn rich, pixel-level representations that support complex scene understanding tasks.

References

[1] Zhao et al., “VideoPrism: A Foundational Visual Encoder for Video Understanding”, ICML 2024.

Closed positions

- PhD Project: Improving Active Learning Strategies for Limited Annotation Budgets

- PhD Project: Synthetic Video Generation with Counterfactual Explanations

- PhD Project: Few-shot Learning for Panoptic Segmentation