Join Us

We believe that exploring the intricacies of computer-based perception benefits significantly when curious and enthusiastic individuals from various backgrounds collaborate within a welcoming and inclusive environment. VISURG reinforces this environment by actively seeking imaginative and inquisitive individuals who possess an interest in Machine Learning and Computer Vision, drawing from a wide array of scientific fields. Our members represent diverse backgrounds, including Computer Science, Medical Imaging, Robotics, Signal Processing, and more. If the prospect of becoming part of a supportive and diverse team that enjoys developing cutting-edge methods excites you, get in touch!

Applying for a PhD

You can explore our current PhD openings below. Closed positions are also listed to give you a sense of the roles we typically offer. In addition to the advertised positions, we also accept talented PhD candidates who have secured personal scholarships (e.g. K-CSC).

If you are considering applying for a PhD in our group, the application process is outlined below:

- Kindly reach out to us via email to express your interest in pursuing a PhD with us. Additionally, provide information about your desired research topic, specifying whether it aligns with one of the projects announced on this website or if it is a different one.

- Submit an online application via King’s Apply. For Programme Name, enter Computer Science Research MPhil/PhD. In the Research Proposal area, mention the project title (see projects below) and specify Dr. Garcia Peraza Herrera as your intended supervisor. The information on what you need to provide for this application can be found here.

- Each PhD Studentship below may have specific additional application steps; please follow them accordingly.

Shortlisted applicants will receive a machine learning challenge by e-mail. They will then be invited to present their results in an interview, which serves to assess their research potential and the contributions they could make to the research activities of the group.

Open fully-funded PhD studentships

- PhD project: Synthetic video generation with counterfactual explanations

Application

- Additional application steps: in addition to the two application steps stated above, please also send an email to [email protected] indicating your intention to apply for the project titled “Synthetic video generation: counterfactual explanations” funded and supervised by Dr. Luis C. Garcia Peraza Herrera from the Department of Informatics, Faculty of Natural, Mathematical & Engineering Sciences.

Details

- Project title: Synthetic video generation with counterfactual explanations

- Primary supervisor: Dr. Garcia Peraza Herrera

- Funding type: 3.5 years

- Tax-free stipend: £1,718.50 per month (inclusive of London weighting)

- Research training and support: £1,000 per year to cover research costs and travel

- Start date: October 2024

- Eligibility: Open to UK and International students

Project overview

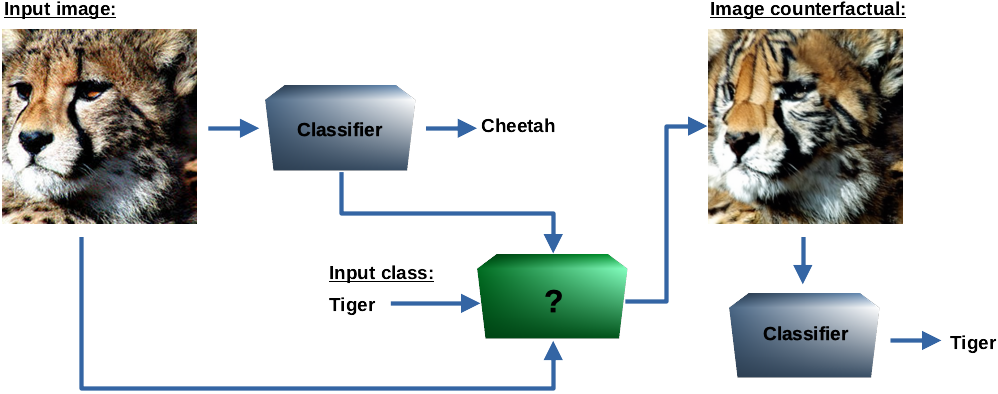

Counterfactual explanations provide valuable insights into machine learning models. They reveal the minimum changes required in the input to yield a different output, as illustrated in Fig. 1 below. In the case of deep learning models using images as input [1, 2], the counterfactual explanation is also presented as an image:

Figure 1. Image counterfactual explanation.

The objective of this project is to extend this concept to video data. Instead of dealing with static images, we aim to devise machine learning methods (represented by [ ? ] in Fig. 1 above) for generating video counterfactual explanations.

A video counterfactual explanation:

- Minimally alters a given input video.

- Causes the video classifier to predict a different and specific class compared to the original input video.
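To make the two properties above concrete, here is a minimal sketch in Python. It is not the method the project will develop: it assumes a toy linear softmax classifier over a flattened video tensor, and it searches for a small perturbation by gradient descent, stopping as soon as the prediction flips to the target class. All names (`predict`, `counterfactual`, `W`, `b`, `lam`, `lr`) are hypothetical and chosen for illustration only.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                      # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def predict(video, W, b):
    """Class index predicted by a toy linear softmax classifier (assumption)."""
    return int(np.argmax(W @ video.ravel() + b))

def counterfactual(video, W, b, target, lam=0.01, lr=0.005, steps=500):
    """Search for a small perturbation of `video` that makes the toy
    classifier predict `target`. Illustrative sketch only."""
    x0 = video.ravel()
    delta = np.zeros_like(x0)            # perturbation added to the input
    onehot = np.eye(len(b))[target]
    for _ in range(steps):
        probs = softmax(W @ (x0 + delta) + b)
        if probs.argmax() == target:     # stop at the first flip to keep the change small
            break
        # Gradient of cross-entropy towards `target` w.r.t. the input,
        # plus the gradient of an L2 penalty on the perturbation size.
        grad = W.T @ (probs - onehot) + 2.0 * lam * delta
        delta -= lr * grad
    return (x0 + delta).reshape(video.shape)

# Usage: a random 4-frame, 8x8 "video" and a random 3-class classifier.
rng = np.random.default_rng(0)
video = rng.normal(size=(4, 8, 8))
W = rng.normal(size=(3, video.size))
b = np.zeros(3)
target = (predict(video, W, b) + 1) % 3  # any class other than the original one
cf_video = counterfactual(video, W, b, target)
```

For real video classifiers the perturbation would typically need to stay on the manifold of realistic videos, which is what generative approaches such as those in [1, 2] address for images; the penalty term here is only a stand-in for that requirement.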

Although our project will focus on developing methods to create video counterfactual explanations specifically tailored to video classifiers, these methods can potentially be applied to other domains as well (e.g. understanding why autonomous robotic systems predict certain actions based on video input).

This computer vision technology has several applications in the medical domain, particularly in the surgical field. The ability to generate synthetic videos can serve to create synthetic datasets for training deep learning models and develop simulators that replicate surgical scenarios, offering clinicians a platform for sharpening their surgical skills.

References

[1] Boreiko et al. Sparse Visual Counterfactual Explanations in Image Space, DAGM GCPR, 2022.

[2] Augustin et al. Diffusion visual counterfactual explanations, NeurIPS, 2022.

- PhD project: Improving active learning strategies for limited annotation budgets

Application

- Additional application steps: in addition to the two application steps stated above, please also send an email to [email protected] indicating your intention to apply for the project titled “Improving active learning strategies for limited annotation budgets” funded and supervised by Dr. Luis C. Garcia Peraza Herrera from the Department of Informatics, Faculty of Natural, Mathematical & Engineering Sciences.

Details

- Project title: Improving active learning strategies for limited annotation budgets

- Primary supervisor: Dr. Garcia Peraza Herrera

- Start date: October 2024

- Eligibility: Open to UK and International students

Project overview

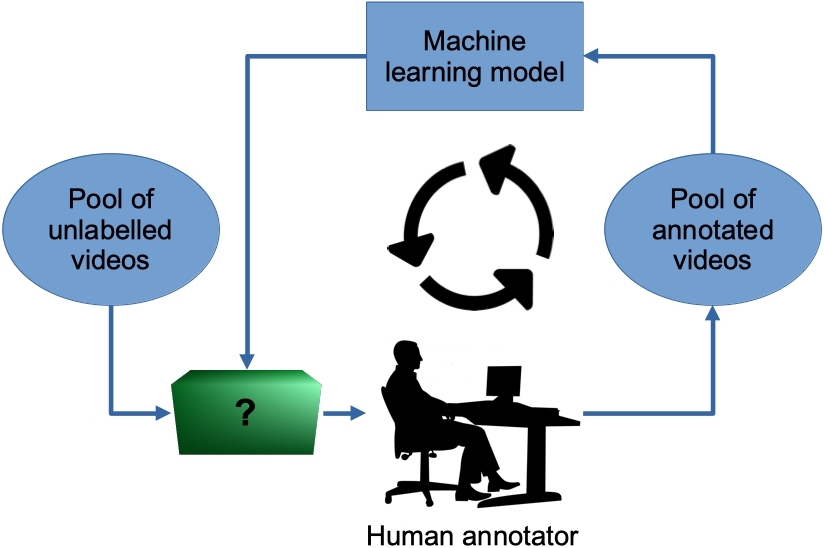

In machine learning, deciding which subset of data points (e.g. images, videos) to annotate is a critical step. The selected data points must be representative of the diverse scenarios expected during real-world testing. Although many strategies for data point selection have been proposed, a persistent observation is that random selection is often hard to beat, especially in low-budget scenarios.

Figure 1. The active learning problem.

The overarching objective of this project is to advance active learning strategies designed specifically for highly limited annotation budgets. This is particularly relevant in fields with stringent budget constraints, such as medicine.
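As a rough illustration of the selection problem, the sketch below contrasts two ways of choosing which pool items to annotate under a fixed budget: uniform random selection and entropy-based uncertainty sampling, a common baseline. Neither is presented as the project's method, and the function names and the `probs` input (per-item class probabilities from some current model) are assumptions made for this example.

```python
import numpy as np

def random_selection(n_pool, budget, rng):
    """Pick `budget` distinct pool indices uniformly at random."""
    return rng.choice(n_pool, size=budget, replace=False)

def entropy_selection(probs, budget):
    """Pick the `budget` pool items whose predicted class distribution
    has the highest entropy (i.e. the model is least certain)."""
    probs = np.asarray(probs, dtype=float)
    entropy = -np.sum(probs * np.log(probs + 1e-12), axis=1)
    return np.argsort(entropy)[::-1][:budget]

# Usage: four unlabelled items, budget of two annotations.
probs = [[0.50, 0.50],
         [0.90, 0.10],
         [0.99, 0.01],
         [0.60, 0.40]]
rng = np.random.default_rng(0)
picked_random = random_selection(len(probs), 2, rng)
picked_uncertain = entropy_selection(probs, 2)   # selects items 0 and 3
```

With very few labels the current model's probabilities can be unreliable, which is one intuition for why uncertainty-driven selection may not improve on random selection in low-budget settings.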

References

[1] Mahmood et al. Low-Budget Active Learning via Wasserstein Distance: An Integer Programming Approach, ICLR, 2022.

[2] Chen et al. Making Your First Choice: To Address Cold Start Problem in Medical Active Learning, MIDL, 2023.

Closed positions

- PhD project: Few-shot learning for panoptic segmentation

Details

- Primary supervisor: Dr. Garcia Peraza Herrera

- Secondary supervisor: Dr. Sophia Tsoka

- Funding type: 4-year K-CSC

- Start date: October 2023

Project overview

Deep learning faces challenges, including the need for extensive training data and the problem of forgetting previously learned tasks when exposed to new data. To address these issues, we focus on few-shot learning, adapting models to new classes with limited training samples, and incremental learning techniques. Our current project aims to enhance few-shot, class-incremental, panoptic segmentation, primarily in surgical video analysis but applicable to broader vision tasks, including object detection and image classification.